You may have noticed increasing activity around quantum technologies in recent years. Giants such as IBM, Microsoft, Google, and Intel are jumping into quantum computing alongside the by-now well-established D-Wave and more recent startups such as Rigetti, Q-CTRL, and Zapata. The European Union recently launched its €1 billion Quantum Technology Flagship, the US House of Representatives passed a bill to invest more than $1.2 billion in quantum information science, and China is already investing heavily in the sector.

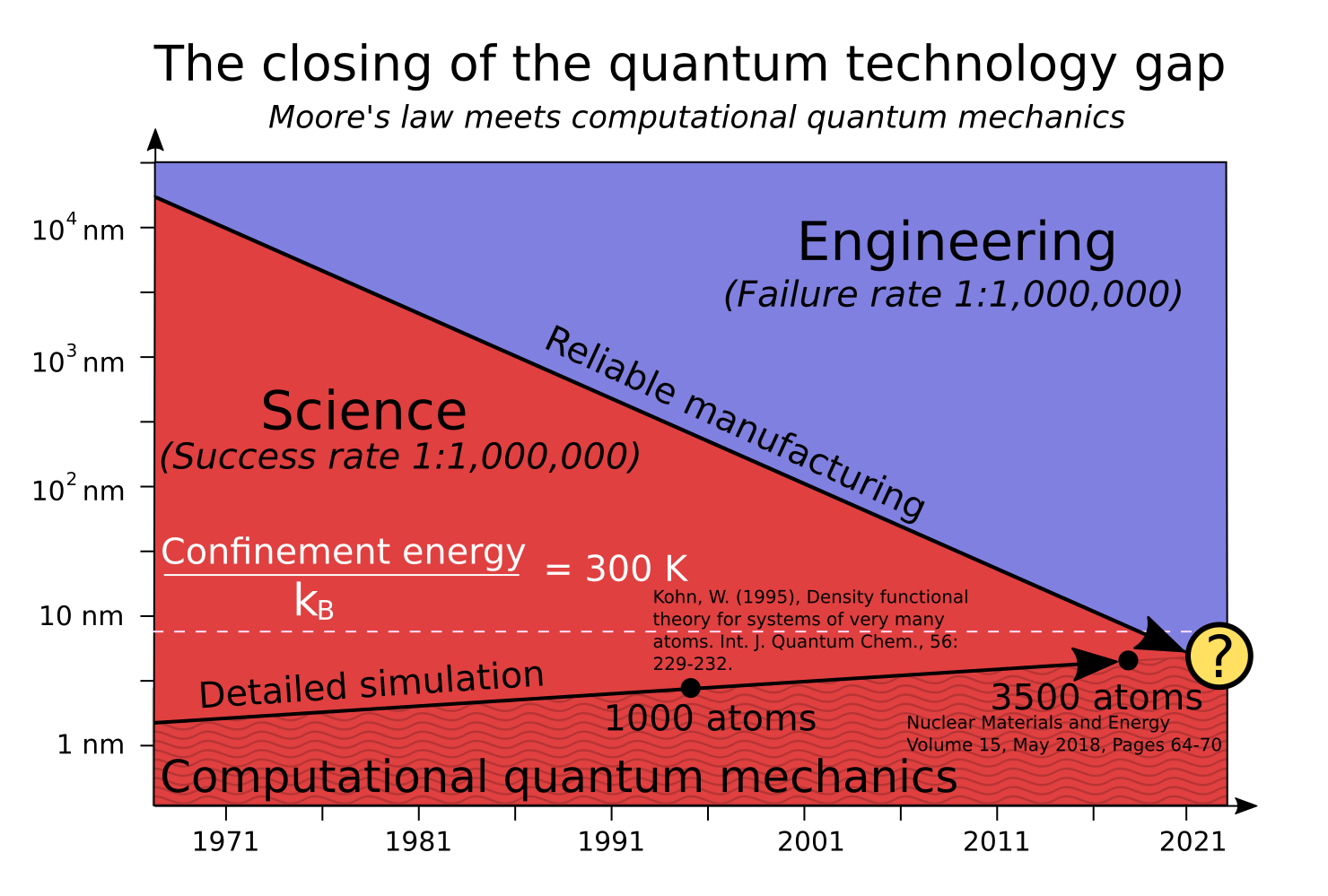

So why is this happening right now? Is fear of missing out driving the hype, or has some fundamental breakthrough suddenly made quantum technologies viable? To understand what is happening, take a look at the figure below.

At the end of Moore’s law.

The difficulties

Much has been made of the difficulties associated with the end of Moore’s law. For more than half a century, technological progress has been driven by ever smaller and faster computer components. In 1971 the typical precision with which transistors were manufactured was 10 µm. For current technology the number is 7 nm, and the target 2-3 years from now is 5 nm. This development is indicated by the “reliable manufacturing” line in the figure above. Further miniaturization is difficult because the fundamental limit set by the atoms and their lattice structure is reached at around 0.5 nm.
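As a rough illustration of the trend described above, the two data points from the text can be joined by a simple exponential. The sketch below assumes that the 7 nm figure refers to 2018 (the text only says "current") and that the trend is a pure exponential. Both are simplifying assumptions, and the function names are made up for this example.

```python
import math

def halving_time_years(year0, size0_nm, year1, size1_nm):
    """Years needed for the feature size to halve, assuming a pure exponential trend."""
    halvings = math.log2(size0_nm / size1_nm)
    return (year1 - year0) / halvings

def year_reaching(target_nm, year1, size1_nm, t_half):
    """Extrapolate the exponential trend to the year a target size is reached."""
    return year1 + t_half * math.log2(size1_nm / target_nm)

# Data points from the text; the "current" year is an assumption.
YEAR_START, SIZE_START_NM = 1971, 10_000.0   # 10 µm
YEAR_NOW, SIZE_NOW_NM = 2018, 7.0            # assumed year for the 7 nm figure

t_half = halving_time_years(YEAR_START, SIZE_START_NM, YEAR_NOW, SIZE_NOW_NM)
year_5nm = year_reaching(5.0, YEAR_NOW, SIZE_NOW_NM, t_half)
year_atomic = year_reaching(0.5, YEAR_NOW, SIZE_NOW_NM, t_half)

print(f"halving time: {t_half:.1f} years")
print(f"5 nm reached around {year_5nm:.0f}")
print(f"0.5 nm reached around {year_atomic:.0f}")
```

The extrapolated dates are only as good as the straight-line assumption. The point is that, on this trend, the atomic limit is a matter of a few more halvings rather than a distant prospect.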

While it is easy to understand that the atomic size is a fundamental limit, things become tricky well before that point. Circuit elements stop working as expected when quantum mechanical effects become important. For example, the confinement energy required to trap an electron within a certain volume becomes comparable to the typical thermal excitation energy at room temperature once the confining dimension drops below about 10 nm. This effect can only be understood with the help of quantum mechanics.
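The order of magnitude of this crossover can be checked with a textbook estimate. The sketch below assumes the simplest possible model, an electron with the free-electron mass in a one-dimensional infinite square well, and compares its ground-state energy with k_B T at 300 K. The model and the function names are assumptions made for illustration. In a real semiconductor the effective mass is smaller than the free-electron mass, which pushes the crossover to larger widths.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant, J*s
M_E = 9.1093837015e-31      # free-electron mass, kg (assumed; effective mass differs)
K_B = 1.380649e-23          # Boltzmann constant, J/K
EV = 1.602176634e-19        # joules per electronvolt

def confinement_energy_j(width_m, mass_kg=M_E):
    """Ground-state energy of a 1D infinite square well of the given width."""
    return (math.pi * HBAR) ** 2 / (2.0 * mass_kg * width_m ** 2)

def crossover_width_m(temperature_k, mass_kg=M_E):
    """Width at which the confinement energy equals k_B * T."""
    return math.pi * HBAR / math.sqrt(2.0 * mass_kg * K_B * temperature_k)

ROOM_TEMPERATURE_K = 300.0
thermal_mev = K_B * ROOM_TEMPERATURE_K / EV * 1e3

for width_nm in (20.0, 10.0, 5.0, 2.0):
    e_mev = confinement_energy_j(width_nm * 1e-9) / EV * 1e3
    print(f"width {width_nm:4.1f} nm: confinement {e_mev:7.2f} meV, thermal {thermal_mev:.2f} meV")

crossover_nm = crossover_width_m(ROOM_TEMPERATURE_K) * 1e9
print(f"energies match at a width of about {crossover_nm:.1f} nm")
```

Because the confinement energy scales as one over the width squared, it goes from negligible to dominant within a single order of magnitude in length, which is why the transition appears abruptly at the nanometer scale.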

The fundamental limits involved here cause great difficulties for the semiconductor industry as we know it, since future performance improvements have to come from sources other than continued miniaturization.

The emergence of quantum engineering

The line of reliable manufacturing can, roughly speaking, be thought of as the dividing line between engineering and science. The success and failure rates in the figure are certainly tongue in cheek and not intended to imply that scientists are incompetent or that engineering is easy. Rather, they indicate the difference in the time scales on which things can be built and tested. Whatever can be reliably manufactured can be tinkered with and improved upon within a limited time frame, while science is a slower process that moves knowledge forward a small step at a time.

Although semi-classical models that take some quantum mechanical effects into account have played an important role in industry for many decades, detailed quantum mechanical models have largely been reserved for scientific study. This is especially true of quantum mechanical models that do not rely on some average over a large number of quantum mechanical particles [1]. But with the line of reliable manufacturing crossing into quantum territory, quantum mechanics is bound to become an engineering discipline, whether that means full-fledged application to quantum computing or simply squeezing the last bit of juice out of transistor miniaturization.

The opportunities

Thanks to the faster execution brought on by Moore’s law, as well as improved algorithms, the field of computational quantum mechanics has continuously increased the number of atoms that can be faithfully modeled quantum mechanically. As an example, two data points are provided in the figure, quoting the scientific literature on the typical system sizes accessible to relatively detailed quantum mechanical modeling with density functional theory (DFT). The length scales in the figure indicate the typical side length of a cube containing the given number of atoms.
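The conversion from an atom count to a cube side length can be sketched as follows. The material is an assumption: the snippet uses crystalline silicon, with 8 atoms per conventional cubic cell of edge 0.5431 nm. The atom counts in the loop are illustrative placeholders, not the data points from the figure.

```python
SI_LATTICE_NM = 0.5431   # silicon conventional cubic cell edge in nm (assumed material)
ATOMS_PER_CELL = 8       # atoms per conventional cell in the diamond-cubic structure

def cube_side_nm(n_atoms, lattice_nm=SI_LATTICE_NM, atoms_per_cell=ATOMS_PER_CELL):
    """Side length of a cube of crystal that contains n_atoms atoms."""
    cells = n_atoms / atoms_per_cell
    return lattice_nm * cells ** (1.0 / 3.0)

# Illustrative atom counts only.
for n_atoms in (1_000, 100_000, 10_000_000):
    print(f"{n_atoms:>10,d} atoms -> cube side of about {cube_side_nm(n_atoms):.1f} nm")
```

Since the side length grows only as the cube root of the atom count, a thousandfold increase in the number of simulated atoms buys just a tenfold increase in linear size.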

With the lines of computational quantum mechanics and reliable manufacturing crossing each other, manufacturing and simulation can greatly benefit from one another. Component production can benefit from the ability to simulate models that are faithful to the end product. More interestingly, however, the ability to build exactly the system that is being simulated can provide hugely useful feedback to the computational quantum mechanics community. While electrical engineers may scratch their heads over how to keep increasing transistor count, scientists should be celebrating the dawn of a new experimental era.

So far, quantum mechanical simulations and experiments have only been proxies for each other. What has been possible to simulate has been challenging to build, and vice versa. Scientific results have therefore had to be filtered through a fair amount of good judgment when compared to each other, and perfect agreement is seldom expected. It has consequently been complicated to disentangle which part of a mismatch is due to the imperfection of the model and which part is due to deficiencies in the actual solution method. With the possibility of reliably producing more or less the exact systems that are being simulated, a better feedback loop can be established to help guide the development of better numerical methods.

The development of better methods can, in turn, feed back into the semiconductor industry to, for example, develop better transistors or metrology equipment. It can also provide additional benefits in other areas such as quantum chemistry, which relies on similar methods but applies them to molecules.

While quantum computing is the poster boy of quantum technologies, it is also the most uncertain direction, since major unsolved questions remain. But the fact that companies are moving in this direction is not just hype. It is a natural and exciting consequence of the end of Moore’s law, one that may have us welcoming that end with open arms rather than dreading it. Regardless of whether quantum computing proves successful, it seems inevitable that quantum technology and quantum engineering are about to rise.

[1] Band structure calculations have, for example, been essential to understanding semiconductors. But these are based on Bloch’s theorem, which relies on a large number of atoms being organized into a repeating pattern, such that a small number of atoms in a so-called unit cell can be taken as representative of the full system.
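The idea that one unit cell can stand in for the whole system can be illustrated with a toy model. The sketch below assumes a one-dimensional ring of identical sites with nearest-neighbour hopping (a standard tight-binding chain, chosen here only for illustration) and checks numerically that a plane wave is an eigenstate with energy E(k) = -2t cos(k). All names and parameter values are hypothetical.

```python
import cmath
import math

def apply_hamiltonian(psi, hopping=1.0):
    """Apply a nearest-neighbour tight-binding Hamiltonian on a periodic ring."""
    n = len(psi)
    return [-hopping * (psi[(j - 1) % n] + psi[(j + 1) % n]) for j in range(n)]

def bloch_state(n_sites, m):
    """Plane wave with wave number k = 2*pi*m/n_sites (lattice spacing set to 1)."""
    k = 2.0 * math.pi * m / n_sites
    return [cmath.exp(1j * k * j) for j in range(n_sites)], k

def max_residual(n_sites, m, hopping=1.0):
    """Largest |H psi - E(k) psi| over all sites, with E(k) = -2 t cos(k)."""
    psi, k = bloch_state(n_sites, m)
    energy = -2.0 * hopping * math.cos(k)
    h_psi = apply_hamiltonian(psi, hopping)
    return max(abs(a - energy * b) for a, b in zip(h_psi, psi))

# The residual stays at rounding-error level however many sites the ring has.
for n_sites in (10, 1_000, 100_000):
    print(f"{n_sites:>7,d} sites: residual {max_residual(n_sites, m=3):.2e}")
```

The energy formula involves only the single-site hopping and the wave number, not the size of the ring, which is the sense in which the repeating unit represents the full system. Once the repeating pattern is broken, as in a nanoscale device with only a handful of atoms across, this shortcut is no longer available.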